Image-based Talking Head from a single image

Image Talk: a Chinese text-to-speech talking head

VR Talk: a speech driven talking head

Updated 27 Feb. 1999 by I-Chen Lin.

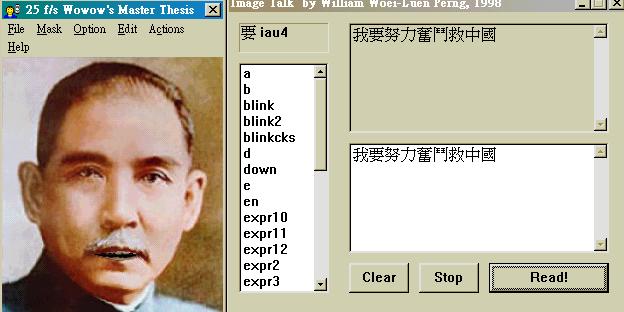

With the emergence of MPEG-4, face synthesis has attracted increasing attention. Synthetic faces can be used in virtual communication, such as virtual meetings and virtual video e-mail, and can also serve as a friendly user interface in various applications. Most approaches synthesize a person's facial expressions and motions with 3D models, which suit applications that must display those motions accurately. Other applications, however, place more weight on realistic facial expressions. For these, an image-based talking head driven by a 2D mesh can be a simple and realistic solution, as shown in Figure 1.

Figure 1. (a) original image; (b) pronouncing "u"; (c) pronouncing "en"; (d) downhearted Mona Lisa

In this research, we propose a talking head system that generates facial expressions from a single facial image by controlling a 2D mesh model. The talking head can currently be driven in two ways: by text or by speech. In addition, it blinks and makes small-scale head rotations and translations under the control of a random mechanism.
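
The page does not say how the single image is deformed by the 2D mesh. A common approach, assumed here, is piecewise-affine warping: each triangle of the deformed mesh is filled by mapping its pixels back into the matching triangle of the neutral image through barycentric coordinates. The sketch below uses nearest-neighbour sampling on a list-of-lists image; the function names are hypothetical and it is not the authors' implementation.

```python
import math

def barycentric(p, a, b, c):
    """Barycentric coordinates (u, v, w) of point p with respect to triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (bx - ax) * (cy - ay) - (cx - ax) * (by - ay)
    # Cramer's rule for p = a + v*(b - a) + w*(c - a)
    v = ((px - ax) * (cy - ay) - (cx - ax) * (py - ay)) / det
    w = ((bx - ax) * (py - ay) - (px - ax) * (by - ay)) / det
    return 1.0 - v - w, v, w

def warp_point(p, deformed_tri, neutral_tri):
    """Inverse mapping: where a point of the deformed triangle comes from in the neutral image."""
    u, v, w = barycentric(p, *deformed_tri)
    (ax, ay), (bx, by), (cx, cy) = neutral_tri
    return u * ax + v * bx + w * cx, u * ay + v * by + w * cy

def render_triangle(src, neutral_tri, deformed_tri, out):
    """Fill the pixels of `out` covered by deformed_tri, sampling `src` (nearest neighbour)."""
    h, w = len(out), len(out[0])
    xs = [x for x, _ in deformed_tri]
    ys = [y for _, y in deformed_tri]
    for y in range(max(0, math.floor(min(ys))), min(h, math.ceil(max(ys)) + 1)):
        for x in range(max(0, math.floor(min(xs))), min(w, math.ceil(max(xs)) + 1)):
            if min(barycentric((x, y), *deformed_tri)) < -1e-9:
                continue  # pixel lies outside the deformed triangle
            sx, sy = warp_point((x, y), deformed_tri, neutral_tri)
            row = min(max(round(sy), 0), len(src) - 1)
            col = min(max(round(sx), 0), len(src[0]) - 1)
            out[y][x] = src[row][col]
```

Calling `render_triangle` once per mesh triangle renders a whole frame. Under this assumption, a small head rotation or translation is simply a rigid transform applied to every deformed-mesh vertex before rendering.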

The system runs at about 30 frames/sec in CIF format (352×288) on a Pentium II 233 MHz PC.
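
The random mechanism for eye blinks and small head motions is not specified on this page. The following sketch assumes exponentially distributed gaps between blinks and a bounded random walk for head translation, sampled at the 30 frames/sec quoted above. All names and parameter values are hypothetical.

```python
import random

FPS = 30  # frame rate reported on this page

def blink_schedule(n_frames, mean_interval_s=4.0, blink_frames=5, seed=None):
    """Per-frame eyelid closure in [0, 1], where 1 means fully closed.

    Gaps between blinks are drawn from an exponential distribution (an assumed,
    not documented, choice); each blink closes and reopens the lid linearly.
    """
    rng = random.Random(seed)
    closure = [0.0] * n_frames
    frame = int(rng.expovariate(1.0 / mean_interval_s) * FPS)
    while frame < n_frames:
        for k in range(blink_frames):
            if frame + k < n_frames:
                # Triangle ramp: reaches 1.0 at the middle frame when blink_frames is odd.
                closure[frame + k] = 1.0 - abs(2 * (k + 1) - (blink_frames + 1)) / (blink_frames + 1)
        frame += blink_frames + int(rng.expovariate(1.0 / mean_interval_s) * FPS)
    return closure

def head_jitter(n_frames, max_shift=2.0, step=0.3, seed=None):
    """Per-frame (dx, dy) head offset in pixels as a bounded random walk (hypothetical)."""
    rng = random.Random(seed)
    dx = dy = 0.0
    offsets = []
    for _ in range(n_frames):
        dx = max(-max_shift, min(max_shift, dx + rng.uniform(-step, step)))
        dy = max(-max_shift, min(max_shift, dy + rng.uniform(-step, step)))
        offsets.append((dx, dy))
    return offsets
```

A renderer could use each closure value to interpolate the eyelid vertices between their open and closed positions, and add each (dx, dy) offset to all mesh vertices. The random walk keeps consecutive offsets close, so the head drifts instead of jumping.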

Image Talk: a Chinese text-to-speech talking head

Image Talk is a Chinese text-to-speech talking head. Users type Chinese sentences into the control panel, and the talking head displays the appropriate facial expressions while playing the corresponding speech.

To make the talking head speak Chinese, Image Talk's database stores the key frames needed for each syllable together with the voice data for words. After analyzing the input sentence, Image Talk retrieves the corresponding syllables and produces a smooth animation with synchronized sound. The result looks like a person giving a speech.
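
One way to turn stored per-syllable key frames into a smooth animation is to lay the key frames out on a timeline and linearly interpolate mesh vertex positions between neighbouring key frames at 30 frames/sec. The page does not describe Image Talk's database layout or interpolation scheme, so the `KEYFRAME_DB` contents, syllable durations, and two-vertex meshes below are purely hypothetical placeholders.

```python
from bisect import bisect_right

FPS = 30

# Hypothetical database: syllable -> (duration in seconds, key-frame meshes).
# Each mesh is a list of (x, y) vertex positions; real meshes have many more vertices.
KEYFRAME_DB = {
    "ni": (0.25, [[(0.0, 0.0), (10.0, 2.0)], [(0.0, 1.0), (10.0, 4.0)]]),
    "hao": (0.30, [[(0.0, 3.0), (10.0, 6.0)], [(0.0, 0.0), (10.0, 2.0)]]),
}

def build_timeline(syllables):
    """Place every key frame of every syllable on a global time axis."""
    times, meshes, t0 = [], [], 0.0
    for s in syllables:
        duration, frames = KEYFRAME_DB[s]
        for i, mesh in enumerate(frames):
            times.append(t0 + duration * i / len(frames))
            meshes.append(mesh)
        t0 += duration
    return times, meshes, t0

def mesh_at(t, times, meshes):
    """Linearly interpolate vertex positions between the key frames around time t."""
    i = bisect_right(times, t)
    if i == 0:
        return meshes[0]
    if i == len(times):
        return meshes[-1]
    t_a, t_b = times[i - 1], times[i]
    alpha = (t - t_a) / (t_b - t_a)
    return [(xa + alpha * (xb - xa), ya + alpha * (yb - ya))
            for (xa, ya), (xb, yb) in zip(meshes[i - 1], meshes[i])]

def animate(syllables):
    """Return one interpolated mesh per video frame for the whole syllable sequence."""
    times, meshes, total = build_timeline(syllables)
    return [mesh_at(n / FPS, times, meshes) for n in range(int(total * FPS) + 1)]
```

Because interpolation also runs across syllable boundaries (from the last key frame of "ni" to the first of "hao"), the mouth moves continuously between syllables instead of snapping. Each resulting mesh would then be rendered by warping the original image.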

VR Talk: a speech driven talking head

VR Talk is a speech-driven talking head. Users provide speech through a microphone or a wave file; VR Talk then uses speech recognition to obtain the most likely pronunciations in the speech.

Currently, we use the DirectSpeechRecognition API from Microsoft Speech SDK 4.0 for speech recognition.
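
Once recognition yields pronunciations with timing, each video frame needs to know which syllable is being spoken. The sketch below does not call the Microsoft Speech SDK. It assumes the recognizer output has already been converted into hypothetical `(syllable, start_s, end_s)` tuples and assigns a label to every frame at 30 frames/sec, with a placeholder `"sil"` label for silence.

```python
FPS = 30

def frame_labels(segments, n_frames, silence="sil"):
    """Assign each video frame the syllable being spoken at that instant.

    segments: list of (syllable, start_s, end_s) tuples; this format is an
    assumption, not the output format of any particular recognizer API.
    """
    labels = [silence] * n_frames
    for syllable, start, end in segments:
        first = max(0, round(start * FPS))
        last = min(n_frames, round(end * FPS))
        for f in range(first, last):
            labels[f] = syllable
    return labels

labels = frame_labels([("ni", 0.0, 0.2), ("hao", 0.2, 0.5)], 20)
```

Each labelled frame could then look up mouth-shape key frames in a per-syllable database, so the text-driven and speech-driven modes could share the same rendering path.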

Download: ImageTalk_paper.pdf

Download: VRTalk_paper.pdf

Advisor - Prof. Ming Ouhyoung

Chien-Chang Ho (PhD student)

Yung-Kang Wu (master's student)

I-Chen Lin (master's student)

Woei-Luen Perng (graduated)