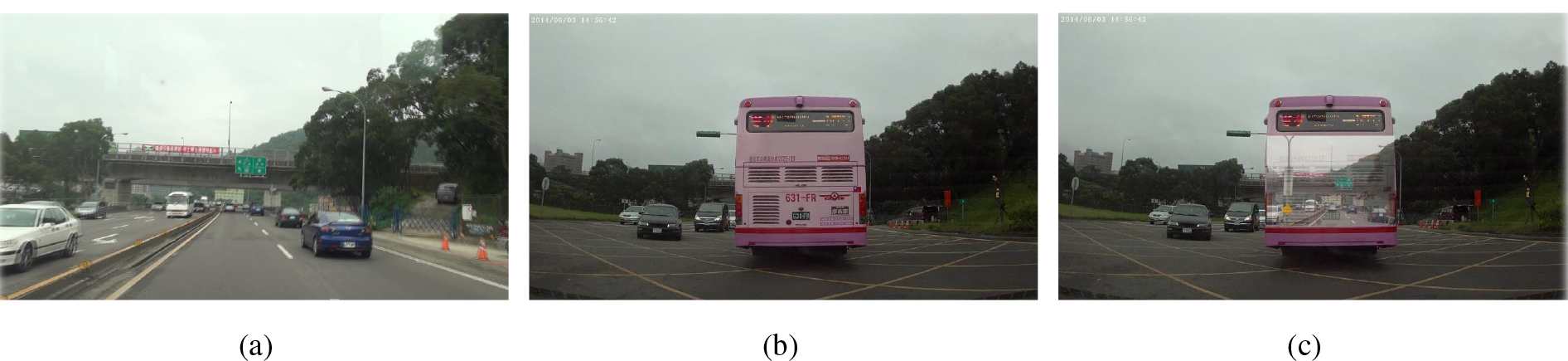

A synthesis result of the proposed view sharing system. (a) The original view of the preceding vehicle. (b) The original view of the subject vehicle, with a large portion of the image blocked by the preceding vehicle. (c) The perspective of the preceding vehicle is transferred to the corresponding view of the subject vehicle to “disocclude” the blocked area, as if the preceding vehicle had become transparent.

Visual obstruction caused by a preceding vehicle is one of the key factors threatening driving safety. One possible solution is to share the first-person view of the preceding vehicle to unveil the blocked field of view of the following vehicle. However, the geometric inconsistency caused by the camera-eye discrepancy makes view sharing between different cars very challenging. In this paper, we present a first-person-perspective image rendering algorithm to solve this problem. First, we contour the unobstructed view as the region to be transferred. Then, by iteratively estimating local homography transformations and performing perspective-adaptive warping with the estimated transformations, we locally adjust the shape of the unobstructed view so that its perspective and boundary match those of the occluded region. The composited view is thus seamless in both perceived perspective and photometric appearance, creating the impression that the preceding vehicle is transparent. Our system improves the driver’s visibility, which relieves the burden on the driver and in turn increases comfort. We demonstrate the usability and stability of our system by evaluating it on several challenging data sets collected from real-world driving scenarios.
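To make the homography estimation step concrete, the following is a minimal sketch of fitting a single homography from matched points with the direct linear transform (DLT). It is an illustration under stated assumptions, not the paper's implementation: the function names `estimate_homography` and `apply_homography` are hypothetical, the point correspondences are assumed to be given, and no coordinate normalization or outlier rejection is performed. A local variant in the spirit of the text would presumably fit one such transformation per image region rather than one for the whole frame.

```python
import numpy as np


def estimate_homography(src, dst):
    """Fit a 3x3 matrix H such that dst ~ H @ src (in homogeneous coordinates).

    Hypothetical helper for illustration; uses the plain DLT without
    normalization, so it is only suitable for well-conditioned inputs.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least four matching point pairs")

    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence contributes two linear constraints on H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])

    # The least-squares solution is the right singular vector that
    # belongs to the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]


def apply_homography(h, pts):
    """Map an (N, 2) array of points through the homography h."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Example: map the unit square onto an arbitrary quadrilateral.
src_pts = [(0, 0), (1, 0), (1, 1), (0, 1)]
dst_pts = [(10, 20), (110, 25), (105, 130), (5, 120)]
H = estimate_homography(src_pts, dst_pts)
mapped_pts = apply_homography(H, src_pts)
```

With exactly four correspondences the fit is exact, so `mapped_pts` reproduces `dst_pts` up to floating-point error. With more, possibly noisy, correspondences the same code returns a least-squares estimate.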

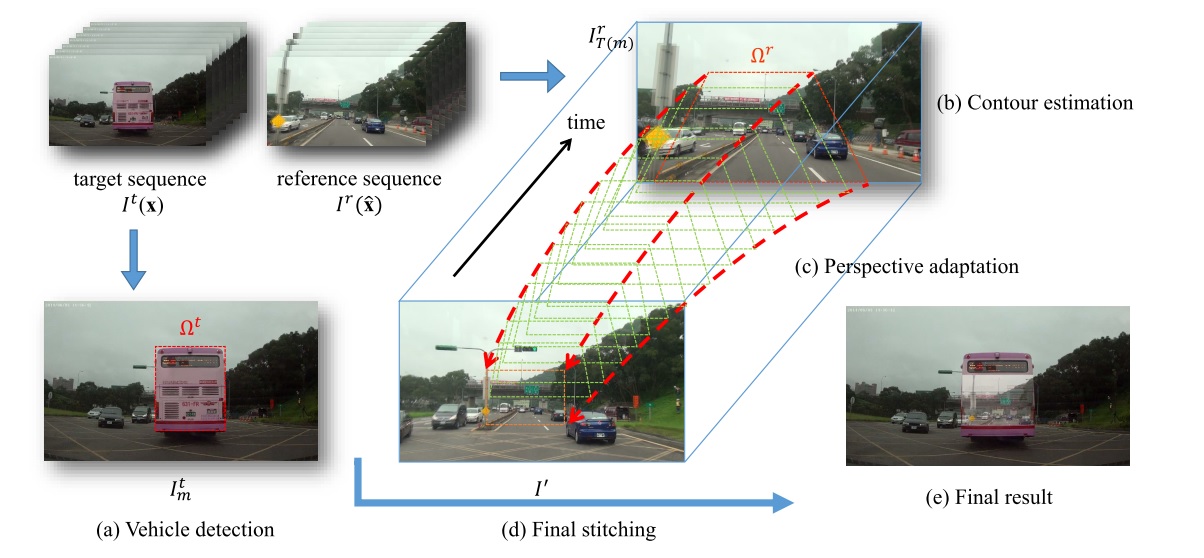

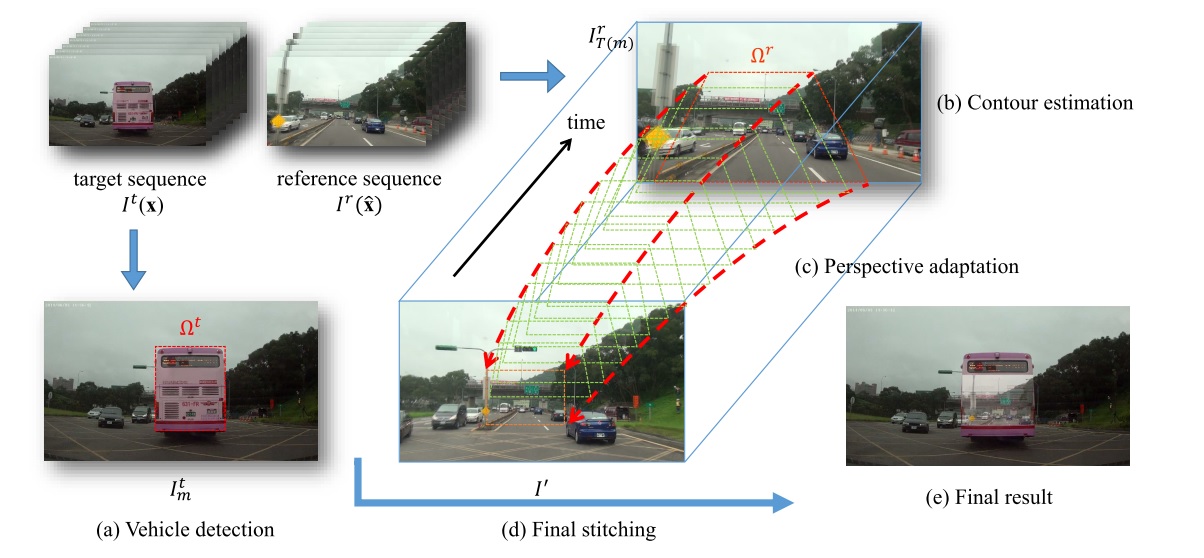

An overview of the proposed method. Given the target and reference sequences, the occluded region (a) in the target image is estimated, and our system automatically finds the corresponding contour (b) in the reference image. To make the area inside the contour in the reference image match the occluded region in the target image, the perspective of the region to be transformed is adapted to fit that of its location in the target image by performing (c) perspective adaptation through the reference video volume and (d) a stitching process between the two image frames. In the perspective adaptation stage, a novel view I′ is synthesized by performing local homography estimation and perspective-aware warping. Finally, we stitch the synthesized view and the target image, seamlessly blending the warped region into the target image to create the impression that the vehicle is transparent (e). Note that the “see-through” effect does not cover the entire occluded region, so that viewers remain consciously aware of the existence of the preceding vehicle, thus improving driving safety.
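The final stitching step can be sketched as a feathered alpha blend of the already-warped view into the target frame. This is a deliberately simple stand-in: the caption does not say which blending method the system uses, and the names `feather_mask` and `blend_views`, as well as the `passes` parameter, are hypothetical. The mask is assumed to cover only part of the occluded region, in line with the note that the see-through effect is partial.

```python
import numpy as np


def feather_mask(mask, passes=4):
    """Soften a binary mask with repeated 3x3 box averaging.

    The result is re-multiplied by the mask on every pass, so alpha
    stays zero outside the region and falls off towards its border.
    """
    mask = np.asarray(mask, dtype=float)
    alpha = mask.copy()
    h, w = alpha.shape
    for _ in range(passes):
        padded = np.pad(alpha, 1, mode="edge")
        # Sum the nine shifted views to get a 3x3 neighbourhood total.
        acc = sum(padded[i:i + h, j:j + w] for i in range(3) for j in range(3))
        alpha = (acc / 9.0) * mask
    return alpha


def blend_views(target, warped, mask, passes=4):
    """Composite `warped` over `target` inside `mask` with soft edges.

    `target` and `warped` are float images of shape (H, W, 3);
    `mask` is an (H, W) array that is 1 inside the see-through region.
    """
    alpha = feather_mask(mask, passes)[..., None]
    return alpha * warped + (1.0 - alpha) * target


# Example: a black target, a white warped view, and a central 20x20 region.
target_img = np.zeros((40, 40, 3))
warped_img = np.ones((40, 40, 3))
region = np.zeros((40, 40))
region[10:30, 10:30] = 1
composite = blend_views(target_img, warped_img, region, passes=3)
```

Pixels outside the region keep the target values, pixels deep inside the region take the warped values, and pixels near the region border are a mixture of the two, which hides the seam.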

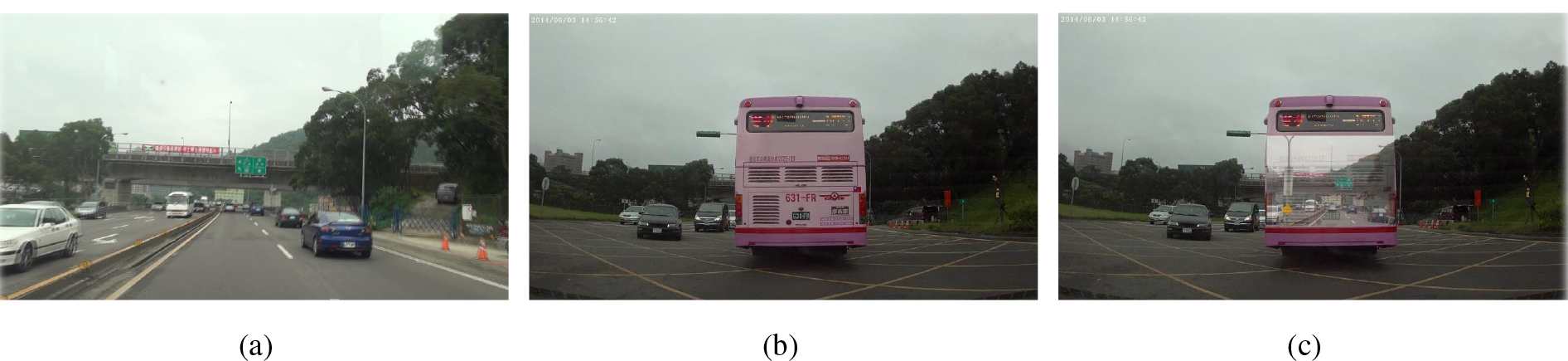


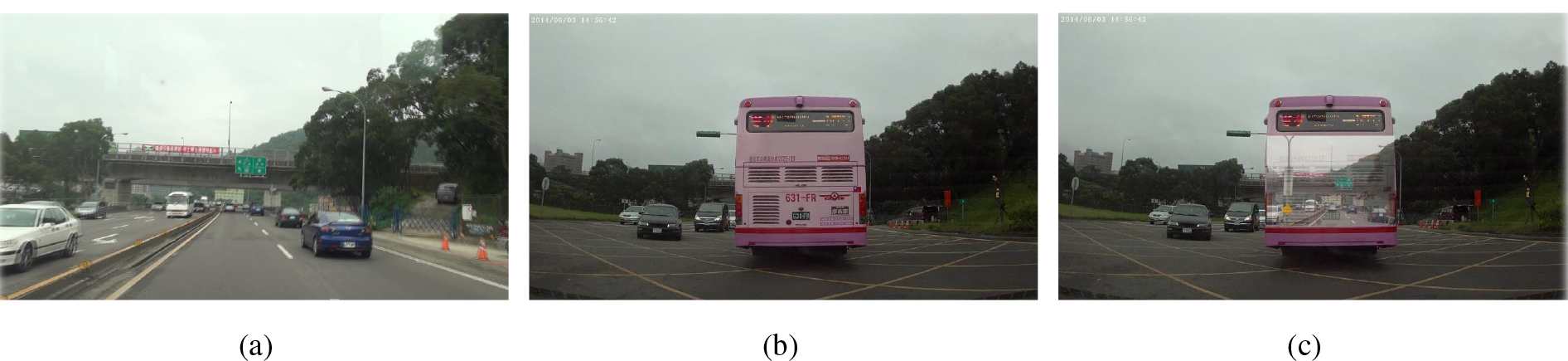

